There is an unavoidable new dynamic unfolding in the contact center space that promises to upend every norm governing operational efficiency, agent performance, and data security.

We are talking about voice security: a strategic set of processes, solutions, and training used to protect voice-based communications. Together, these preserve the confidentiality, integrity, and availability of the voice channel.

More specifically, we are seeing in real time how protecting and defending the voice channel has become exponentially more challenging.

Threat agents can now incorporate the power of Generative AI (GenAI) tools to uplevel their effectiveness at manipulation, deceit, and theft.

Ground Zero

Contact centers are at ground zero for voice security, as the voice channel is core to both customer experience (CX) and customer satisfaction…and the voice channel is under attack.

As Jonathan Nelson, director of product management for Hiya, predicts, “We should expect a pretty drastic shift in the scam ecosystem and the way scam calls are created within the next couple of years. It’s going to be incredibly fast.”

AI Transforming Voice-Based Fraud

AI technology has, since 2022, extended its reach far beyond copy generation. It is now in the realm of automated task analysis, business plan development, product design, and multimedia synthetic content creation.

A plethora of AI-powered applications has since emerged to meet the needs of businesses anxious to leverage their labor-saving and efficiency-optimizing promises.

Contact centers are integrating GenAI-powered tools and solutions into their platforms to reduce waste, speed customer response, support more personalized service delivery, streamline problem-solving, and harness insights that further enhance customer interactions and service delivery.

But, as is often the case, powerful technological advances can carry unforeseen risks when they fall into the wrong hands.

When it comes to AI-enhanced fraud perpetrated through the voice channel, we see several emerging trends that are profoundly impacting the contact center’s performance and security. These threat trends can be viewed through three lenses:

- The proliferation of attacks

- The potency of attacks

- The profile of attackers

Proliferation of Attacks

We know that customer service-oriented contact centers, with their ready access to account credentials and personal data, are particularly attractive targets for criminal intruders seeking to exploit the human vulnerabilities of live agents.

We also know that the voice channel, in particular, has emerged as a favored conduit for AI-enhanced cyberattack activity.

In its “2024 Voice Intelligence and Security Report”, voice authentication solution provider Pindrop reported that, from 2022 to 2023, the rate of fraudulent calls into contact centers surged by 60% with no abatement in sight.

It’s hard to overlook the fact that this sudden escalation emerged shortly after GenAI synthetic content development applications made their 2022 debut.

Potency of Attacks

Already, 85% of enterprise cybersecurity leaders say recent cyberattacks are being augmented by GenAI applications.

According to experts monitoring activity on the dark web, cybercriminals are using AI-based tools to:

- Quickly gather intel on high-value targets.

- Identify vulnerabilities in specific organizations for more precise attack targeting.

- Harvest and organize a portfolio of personally identifying information (PII) used to create false identities and defeat traditional caller authentication practices.

- Generate interactive scripts, deepfake images, and cloned voices used to advance criminal deceptions.

- Write detection-evading code for malware.

What’s more, according to a recent report on cybersecurity news site DarkReading, easy access to open-source GenAI technology has spawned an underground factory for bootleg applications unrestricted by laws or regulations. Much of that activity is now centered around applications designed to create artificial content for criminal exploitation.

There can be little doubt about the intention of applications marketed on the dark web with names such as:

- “EvilProxy” (a kit used by hackers to bypass standard multi-factor authentication [MFA] security protocols).

- “FraudGPT” (a malicious chatbot that creates not only interactive content for phishing and vishing [voice phishing] attacks but also malicious code for use in ransomware attacks).

For a small subscription fee, any cyberthief wannabe can now be handed a toolkit used to launch sophisticated cyberattack campaigns from their personal computer. No special skills required.

Profile of Attackers

Not only are new AI-generated threat tactics expanding exponentially; so, too, are the legions of criminals practicing them.

Wide-scale social engineering and vishing campaigns that used to require the expertise of large criminal gangs like REvil, Black Cat, and Scattered Spider can now be launched by lone individuals leveraging illicit, AI-based hacker toolkits.

While it’s long been commonplace for phone-based fraudsters to hide their identities behind spoofed (digitally altered) phone numbers, they can now attach those numbers to multiple false identities.

And, with just a bit of information about the people they are imitating plus short audio clips lifted from social media, threat agents can use AI models not only to write convincing, interactive scripts that resemble trusted sources but also to actually sound like those sources.

The value of that to a criminal impostor is immense. And it’s no longer just theoretical.

There are, in fact, already more than 350 GenAI-empowered tools dedicated to voice cloning alone, with each new generation improving on the quality of the last.

Regulators Sound the Alarm

While U.S. federal agencies have taken note, it appears there is little they can do beyond posting advisory alerts.

In an October 2024 Industry Letter addressing statewide financial organizations and affiliates, the New York State Department of Financial Services (NYDFS) warns of the imminent threat posed by criminal agents utilizing the power of AI in their schemes.

The letter details how criminal access to AI applications has amplified the effectiveness, scale, and speed of their cyberattacks, particularly on financial institutions. It also points out that AI-generated audio, video, and text (“deepfakes”) are making it harder to distinguish legitimate customer callers from impostors.

It’s no surprise that, in detailing the most concerning AI-enhanced attack vectors, the advisory puts AI-enhanced social engineering schemes and, particularly, voice phishing (vishing) at the top of its list of “most significant threats.”

The Price We Pay

It is notable that, according to survey results compiled by research and analysis firm ContactBabel for the US 2024 Contact Center Decision-Maker’s Guide, an average of 65% of calls to contact centers require caller ID verification (for the financial industry, that percentage rises to 80-90%).

Of those calls, a full 90% require vetting through a live agent. The cost associated with these extensive caller ID verification processes has risen consistently and now tops $12.7 billion industry-wide.
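
Taken together, the two survey figures imply how much of total call volume ends up in agent-led verification. The back-of-the-envelope sketch below assumes the 90% live-agent figure applies uniformly across industries (our assumption; the survey may break it down differently). The function name `live_agent_share` is ours, purely for illustration.

```python
def live_agent_share(verification_share: float, agent_share: float = 0.90) -> float:
    """Share of ALL calls that end up verified by a live agent.

    verification_share: fraction of calls requiring caller ID verification.
    agent_share: fraction of those calls vetted through a live agent
                 (0.90 per the survey; assumed constant across industries).
    """
    return verification_share * agent_share


# Cross-industry average (65%) and the financial-industry range (80-90%).
for label, share in [("average", 0.65), ("finance, low", 0.80), ("finance, high", 0.90)]:
    print(f"{label}: {live_agent_share(share):.1%} of all calls")
```

On these assumptions, roughly 58.5% of all calls involve a live agent confirming the caller's identity, rising to between 72% and 81% in the financial industry.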

Despite continuous improvements in fraud detection applications and practices designed to streamline the caller verification process, the process of confirming caller identity today consumes 65% more time than it did nearly 15 years ago.

This, in large part, is the price we pay to protect the organization and its customers against ever-increasing, ever-more sophisticated cyberattack threats.

A Strategy Reset: A Focus on the Call Flow

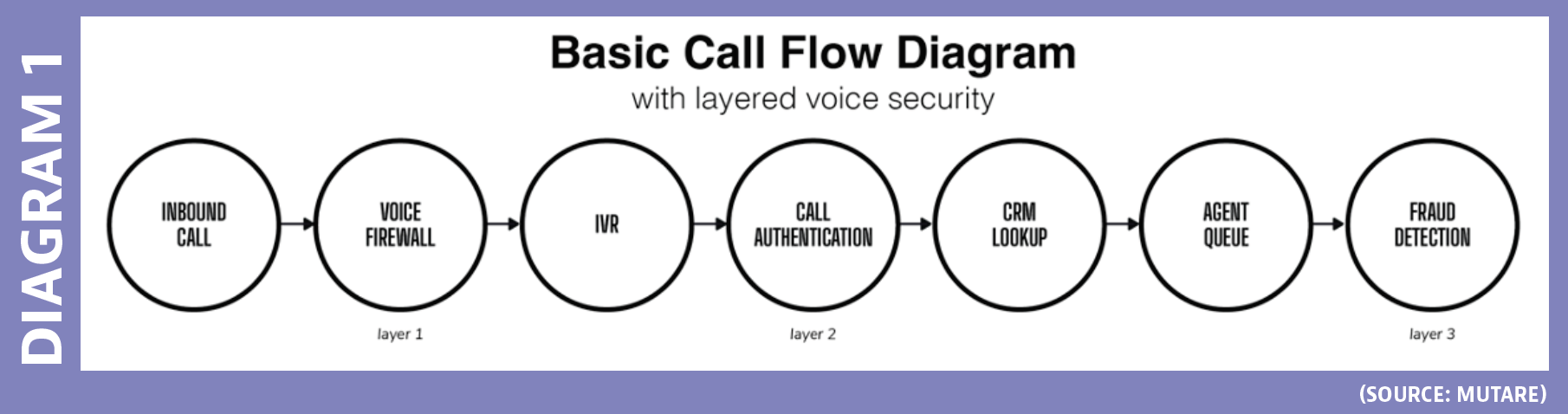

When architecting an enterprise-class, cost-effective, resilient voice defense, the call flow must take center stage. From call inception through agent interaction, industry best practices emphasize the need for a multi-layered approach. The diagram above (see Diagram 1) illustrates the recommended layers of security and where they fit into a typical call flow.

Each layer can be further defined as follows.

Voice Firewall

A voice firewall is a technology-based call filtering system that examines the forensics of call data related to incoming voice traffic at the forefront of the call flow.

Calls that are clearly abusive, disruptive, or unwanted (robocalls, spoofed calls, spam storms) are identified and eliminated before they pass to the IVR. Other calls may require additional caller authentication screening.

Critically, a voice firewall uses advanced analytics and machine learning to identify anomalous behaviors and tell-tale indicators of potential fraud. It can be configured to either remove or reroute those calls deemed suspicious to other resources for additional vetting.

By cleansing incoming call traffic of unwanted calls from the beginning, a voice firewall optimizes the performance of downstream call authentication and fraud detection solutions. And, by reducing live agent interaction with unwanted callers, it substantially reduces the organization’s risk exposure to voice-based threats.
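
To make the remove-or-reroute behavior concrete, here is a minimal sketch of how a voice firewall's triage step might look. It is not any vendor's implementation: the field names, thresholds, and the `anomaly_score` input (standing in for the output of a machine learning model) are all hypothetical.

```python
from dataclasses import dataclass
from enum import Enum


class Verdict(Enum):
    ALLOW = "allow"      # pass the call on to the IVR
    REROUTE = "reroute"  # send to another resource for additional vetting
    BLOCK = "block"      # eliminate before the call reaches the IVR


@dataclass
class CallMetadata:
    """Hypothetical call data examined at the front of the call flow."""
    ani: str               # calling number as presented
    calls_last_hour: int   # calls seen from this number in the past hour
    on_blocklist: bool     # number matches a known robocall/spam list
    anomaly_score: float   # 0.0-1.0, assumed output of an ML model


def triage(call: CallMetadata,
           max_calls_per_hour: int = 30,
           reroute_threshold: float = 0.7) -> Verdict:
    # Clearly unwanted traffic (known spam sources, call storms) is removed.
    if call.on_blocklist or call.calls_last_hour > max_calls_per_hour:
        return Verdict.BLOCK
    # Anomalous but not clearly abusive calls go for additional vetting.
    if call.anomaly_score >= reroute_threshold:
        return Verdict.REROUTE
    return Verdict.ALLOW


print(triage(CallMetadata("+15550100", 2, False, 0.1)))
print(triage(CallMetadata("+15550199", 400, False, 0.2)))
```

In practice the thresholds would be tuned to the organization's traffic, and the choice between blocking and rerouting is a configuration decision, as noted above.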

Call Authentication

Call authentication takes place mid-stream along the call flow and refers to a combination of technologies and practices used to confirm that the person calling is actually who they say they are. While a voice firewall examines the call metadata, call authentication examines the caller. Automatic number identification (ANI) validation and CRM lookup are common call authentication technologies.

Modern contact center platforms may also include biometric screening technology that analyzes callers’ keypad interactions as they navigate the IVR system, looking for signs of reconnaissance “probing” indicative of nefarious intent.

Additionally, these systems may “listen” to caller voice responses to IVR prompts and flag those deemed inconsistent. Suspect results may trigger agent desktop alerts or call routing to a specialty resource.
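
The mid-stream checks described above might be combined along the following lines. This is a simplified sketch under stated assumptions: the in-memory `CRM` dictionary stands in for a real CRM lookup, and counting distinct IVR menu choices is a deliberately crude, hypothetical proxy for the keypad-behavior analysis a commercial platform would perform.

```python
from typing import Dict, List, Optional

# Hypothetical CRM data: registered phone number -> customer account ID.
CRM: Dict[str, str] = {"+15550100": "acct-1001", "+15550101": "acct-1002"}


def lookup_account(ani: str) -> Optional[str]:
    """ANI validation via CRM lookup: is this number tied to a known customer?"""
    return CRM.get(ani)


def looks_like_probing(menu_choices: List[str], max_distinct: int = 4) -> bool:
    """Flag callers who explore an unusually wide range of IVR options."""
    return len(set(menu_choices)) > max_distinct


def authenticate(ani: str, menu_choices: List[str]) -> dict:
    account = lookup_account(ani)
    probing = looks_like_probing(menu_choices)
    return {
        "account": account,
        "ani_matched": account is not None,
        "probing_suspected": probing,
        # Suspect results trigger an agent desktop alert (or special routing).
        "alert_agent": account is None or probing,
    }


print(authenticate("+15550100", ["1", "3"]))
print(authenticate("+15550100", ["1", "2", "3", "4", "5", "9"]))
```

Note that a matching ANI is only one signal: because phone numbers can be spoofed, the result feeds the later layers rather than settling the caller's identity on its own.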

Fraud Detection

Fraud detection is the last line of defense, carried out at the agent level. Note that fraud detection screening may be integrated into certain call authentication technologies. Results from prior technology-based screenings may generate alerts on the agent desktop prompting additional action. In general, agent-level fraud detection practices include:

- Knowledge-based authentication (KBA). A traditional security protocol where agents ask the caller to repeat a password or answer a security question.

- Multi-factor authentication (MFA). A step up from the less-secure KBA protocol, MFA requires proof of identity through multiple, unrelated verification mechanisms. As noted earlier in this article, hacker toolkits are already being developed to defeat MFA screening, so MFA alone is not adequate protection.

- Voice biometrics. There are now sophisticated voice biometrics systems that use voice printing to match a caller’s voice with that of a known customer (or against a database of known scammers). These systems can speed caller authentication or detect a fraud attempt without the added friction of MFA questioning.

  - While criminal use of GenAI voice synthesizing applications is creating new challenges for these systems, the more advanced applications are using AI and machine learning algorithms. These enhance sensitivity to stay ahead of evolving threats that leverage synthetic voice.

  - In line with privacy laws, most states require customer opt-in for organizations to store personal voice recordings. But we see the use of this technology expanding in the years to come.

- Agent training. Agent training is an essential element for cybersecurity. But, as current events have shown, live humans make poor firewalls against increasingly sophisticated, AI-armed threat agents. Contact center agents should be viewed not as a shield but as one more element of a strong, multi-layered defense.
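
One way to picture how these practices work together at the agent desktop is the decision sketch below. It is illustrative only: the function name, the three outcomes, and the 0.8 voiceprint threshold are our assumptions, not an industry standard. The point it encodes is the one made above: no single check, MFA included, is trusted on its own once earlier layers have raised an alert.

```python
from typing import Optional


def agent_decision(upstream_alerts: int,
                   kba_passed: bool,
                   mfa_passed: bool,
                   voiceprint_score: Optional[float] = None,
                   voiceprint_threshold: float = 0.8) -> str:
    """Return 'proceed', 'step_up', or 'escalate' (hypothetical outcomes).

    voiceprint_score is None when the customer has not opted in to
    voice biometrics; otherwise it is an assumed 0.0-1.0 match score.
    """
    # A poor match against an enrolled voiceprint is a strong fraud signal.
    if voiceprint_score is not None and voiceprint_score < voiceprint_threshold:
        return "escalate"
    # With alerts from earlier layers, MFA alone is not considered enough:
    # require a corroborating voiceprint match as well.
    if upstream_alerts > 0:
        if mfa_passed and voiceprint_score is not None:
            return "proceed"
        return "escalate"
    if mfa_passed:
        return "proceed"
    # KBA alone is the weakest check, so it only earns a step-up to MFA.
    return "step_up" if kba_passed else "escalate"


print(agent_decision(0, kba_passed=True, mfa_passed=False))
print(agent_decision(1, kba_passed=True, mfa_passed=True))
```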

Final Prediction

While there is no way to fully know what changes lie ahead for voice security in the contact center, one thing is clear.

As negative forces grow in scale and sophistication, protecting the interconnected processes that keep our organizations operating at optimal levels must include a multi-layered approach to voice cybersecurity. Such an approach taps best-in-class practices and technologies that are adaptable, responsive, and work collectively to assure optimal work process efficiency.

In this way, the balancing act that is our ever-present reality will continue to support a positive CX while assuring a high level of security for agents, their customer callers, and the organizations they serve.